Now let’s talk about how Swift takes a slightly different approach to the consistent hashing algorithm, and about the importance of rings in Swift.

Please note: After reading a few Swift articles, I believe the terms drive and device are used interchangeably, so I’ll do the same here.

In the last entry, I mentioned that some objects have to move to another drive when I add or remove a drive from the cluster. What happens to an object while it is being moved? It won’t be available for use, and we don’t want to wait for it to reach its new drive; we want it to be available at all times. Swift builds the ring (and rebuilds it by executing the rebalance command when necessary) to ensure the data is distributed evenly throughout the ring.

As far as I have read, there are three types of rings: account rings, container rings, and object rings. To read more about the relations among accounts, containers, and objects, see Basic Architecture and Data Model of OpenStack Swift.

1. Partitions and Partition Power

Swift divides the hash space into partitions, each with a fixed width. Those partitions are then assigned to drives by a placement algorithm.

When the rings for a cluster are created, an integer called the partition power is chosen (the operator supplies it when building the ring). Swift uses the partition power to calculate the total number of partitions to assign to the drives in the cluster. Let N = total number of partitions, and p = partition power:

$N = 2^p$

Let’s say that the partition power is 7. In that case, the cluster will have $2^7 = 128$ partitions, which will then be mapped to the available drives in the cluster. What’s good about this is that the number of partitions in the cluster stays the same at all times, even though the number of drives may change as drives are added or removed.
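To make the arithmetic concrete, here is a minimal Python sketch of the formula above. The function name `partition_count` is my own, chosen for illustration; it is not part of Swift.

```python
def partition_count(partition_power: int) -> int:
    """Return the total number of partitions, N = 2 ** p."""
    return 2 ** partition_power


# The partition count depends only on the partition power,
# not on how many drives are currently in the cluster.
for power in (7, 10, 16):
    print(f"partition power {power}: {partition_count(power)} partitions")
```

With a partition power of 7 this gives the 128 partitions from the example.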

But that’s not all.

2. Replica Count and Replica Locks

Replica count is the number of copies kept of each partition, and it is what makes Swift redundant: Swift keeps multiple copies of each partition and places them across the cluster. Say that I have a replica count of three: each partition will have 3 copies, and each copy will be placed on a different device in the cluster, which helps with redundancy.

A higher replica count keeps us better protected against losing data, or against data being unavailable. If a device is added or removed and partitions are moving to different devices, we still have other replicas available.

Let’s say that I’m moving a partition, call it partition_1, to another device. While one copy of partition_1 is being moved, the other replicas of partition_1 should not be moved. Swift uses replica locks to lock those replicas so that they cannot move to another device, which keeps them available.
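As a rough illustration of the locking idea, the sketch below refuses to move a partition again until some time has passed since its last move. The `ReplicaLocks` class and its method names are hypothetical, and a time-based lock is my assumption. Swift's ring builder has a `min_part_hours` setting that serves a similar purpose, but this is not Swift's implementation.

```python
import time


class ReplicaLocks:
    """Hypothetical tracker: one move per partition within a lock window."""

    def __init__(self, lock_seconds: float):
        self.lock_seconds = lock_seconds
        self._last_moved = {}  # partition number -> time of its last move

    def can_move(self, partition: int, now: float = None) -> bool:
        """True if no replica of this partition moved within the window."""
        now = time.time() if now is None else now
        moved_at = self._last_moved.get(partition)
        return moved_at is None or now - moved_at >= self.lock_seconds

    def record_move(self, partition: int, now: float = None) -> None:
        """Remember that a replica of this partition has just been moved."""
        self._last_moved[partition] = time.time() if now is None else now


locks = ReplicaLocks(lock_seconds=3600)
locks.record_move(1, now=0)
print(locks.can_move(1, now=10))    # other replicas of partition 1 stay put
print(locks.can_move(1, now=3600))  # the lock window has passed
```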

3. Data Distribution

Swift uses two other mechanisms to evenly distribute the data across the cluster.

3.1. Weight

Remember that, when I talked about the consistent hashing algorithm, I mentioned that data needs to be evenly distributed to help with load balancing? (See the Multiple Markers in Consistent Hashing Algorithm section.) It appears that the first mechanism, weight, is used to calculate how many partitions need to be assigned to each drive. It is a user-defined value given for each drive when the drive is added to the ring, and it is also used when rebalancing the rings: that is, when Swift rebuilds the rings to evenly distribute data among the drives. A drive with a higher weight is assigned a proportionally higher number of partitions. The weight does not change the partition power or the total number of partitions, which stays fixed.
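One way to picture the weight mechanism is as a proportional share: each drive gets a fraction of all partition replicas equal to its weight divided by the total weight. The sketch below assumes exactly that. The function name and the drive names are hypothetical, and the real ring builder also takes other constraints into account.

```python
def desired_partitions(weights, partition_power, replica_count):
    """Return the share of partition replicas each drive should hold."""
    total_assignments = (2 ** partition_power) * replica_count
    total_weight = sum(weights.values())
    return {
        drive: total_assignments * weight / total_weight
        for drive, weight in weights.items()
    }


# Hypothetical cluster: d2 has twice the weight of d0 and d1.
weights = {"d0": 100, "d1": 100, "d2": 200}
shares = desired_partitions(weights, partition_power=7, replica_count=3)
for drive, share in shares.items():
    print(drive, share)
```

Here 128 partitions times 3 replicas gives 384 partition replicas to place, and the drive with double the weight ends up with double the share.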

3.2. Unique-as-possible

The second mechanism that Swift uses is more like a placement algorithm, called unique-as-possible. Simply put, it looks for the region ⇒ zone ⇒ server ( <ip-address>:<port-number> ) that is used the least compared to other regions, zones, and servers, in that order. If necessary, it will also look for the drive that is used the least. Once found, Swift places the partition there.
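The ordering described above (region, then zone, then server, then drive) can be sketched as a sort key. This is a simplified illustration under my own assumptions: `pick_device` and the device dictionaries are hypothetical, "usage" is counted only among devices already holding a replica of the same partition, and weight is ignored.

```python
from collections import Counter


def pick_device(devices, used):
    """Pick the device whose region, zone, server and drive are least used.

    `used` holds the devices that already store a replica of this partition.
    """
    regions = Counter(d["region"] for d in used)
    zones = Counter((d["region"], d["zone"]) for d in used)
    servers = Counter((d["region"], d["zone"], d["ip"], d["port"]) for d in used)
    used_ids = {d["id"] for d in used}

    def usage(dev):
        # Tuples compare left to right: region first, then zone, and so on.
        return (
            regions[dev["region"]],
            zones[(dev["region"], dev["zone"])],
            servers[(dev["region"], dev["zone"], dev["ip"], dev["port"])],
            dev["id"] in used_ids,
        )

    return min(devices, key=usage)


# Hypothetical devices: two regions, three servers, four drives.
devices = [
    {"id": 0, "region": 1, "zone": 1, "ip": "10.0.0.1", "port": 6000},
    {"id": 1, "region": 1, "zone": 1, "ip": "10.0.0.1", "port": 6000},
    {"id": 2, "region": 1, "zone": 2, "ip": "10.0.0.2", "port": 6000},
    {"id": 3, "region": 2, "zone": 1, "ip": "10.0.1.1", "port": 6000},
]

placed = []
for _ in range(3):  # replica count of three
    placed.append(pick_device(devices, placed))
print([d["id"] for d in placed])
```

In this run the second replica goes to the other region, and the third goes to the unused zone in the first region rather than to the second drive on the server that already holds a replica.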

4. Ring Data Structures

Every time the ring is built (or rebuilt), it seems that two important data structures are created as well: the device list and the device look-up table. Since the proxy server handles the REST calls from clients, I believe that it relies on these two data structures to route the incoming and outgoing objects accordingly.

4.1. Device Look-up Table

The device look-up table contains the information that the proxy server process looks up to find which devices hold a given piece of data. Say the client sends a GET request to download an object. The process calculates the hash value of the object named in the GET request and maps it to a specific partition. Also remember that the partitions are replicated and then mapped to different drives, so the process is directed to the correct devices containing the object.
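The hash-to-partition step might look roughly like the sketch below. The choice of MD5, the use of the top bits of the digest, and the function name `partition_for` are my assumptions for illustration; the object path is made up.

```python
import hashlib
import struct


def partition_for(path: str, partition_power: int) -> int:
    """Map an object path to a partition number in [0, 2 ** partition_power)."""
    digest = hashlib.md5(path.encode("utf-8")).digest()
    # Read the first 4 bytes of the digest as an unsigned big-endian integer.
    top32 = struct.unpack_from(">I", digest)[0]
    # Keep only the top `partition_power` bits.
    return top32 >> (32 - partition_power)


part = partition_for("/account/container/object", partition_power=7)
print(part)  # some partition number between 0 and 127
```

Because only the hash and the partition power are involved, the same object always maps to the same partition, no matter how many drives are in the cluster.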

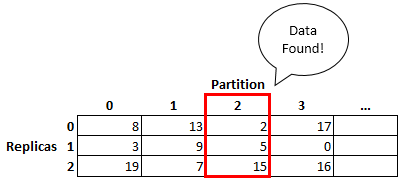

Each row in the device look-up table represents a replica (replica 0 being the first, 1 being the second, and so on). Each column represents a partition. Each entry in the table is a drive ID. Given that, the process looks at the device where the first replica is located, and then at the next n - 1 rows, n being the replica count of the cluster.

Example of device look-up table:

In the table above, we can see that the data was found in partition 2. Replicas 0, 1, and 2 of partition 2 are mapped to drives 5, 2, and 15, respectively.
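In code, this kind of table can be pictured as a list of rows, one per replica, indexed by partition. In the sketch below only the partition 2 column (drives 5, 2, and 15) comes from the example; every other drive ID, and the name `drives_for`, is made up for illustration.

```python
# Rows are replicas, columns are partitions, entries are drive IDs.
lookup_table = [
    [1, 7, 5, 3],    # replica 0
    [4, 0, 2, 9],    # replica 1
    [8, 11, 15, 6],  # replica 2
]


def drives_for(partition: int) -> list:
    """Return the drive ID of every replica of the given partition."""
    return [row[partition] for row in lookup_table]


print(drives_for(2))  # [5, 2, 15]
```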

4.2. Device List

The device list contains the active devices in the cluster. If we look more into the Swift architecture and its data model, this will probably make a lot more sense. Each device (to which partitions are mapped) belongs to a storage node, which in turn belongs to a zone. Each zone belongs to a region. A region is typically a geographical location defined by the user, who is prompted to provide values for country, city (and some others) while creating a Swift cluster. All of those regions together make up one Swift cluster (see Basic Architecture and Data Models of OpenStack Swift for more details).

So the point of the device list is to hold information about each device: which region and zone it falls in, its weight, its device ID, etc. I believe that the proxy server uses this information to handle the REST calls and to direct objects from/to the correct devices.

Example of device list:

| | 0 | 1 | 2 | 3 | … |
|---|---|---|---|---|---|
| Devices | device id=0 region=2 zone=1 weight=100 … | device id=1 region=3 zone=1 weight=100 … | device id=2 region=1 zone=3 weight=100 … | device id=3 region=2 zone=2 weight=100 … | … |

Going back to the earlier example with the device look-up table: when the proxy server process found the data in partition 2, it also found that one of the replicas of partition 2 (replica 1) is on drive 2. So it then refers to the device list, looks at device 2, and sees that it is located in region 1, zone 3, and so forth.
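That last step can be sketched as a plain list indexed by device ID. The four entries mirror the example device list above; the variable names and the dictionary layout are my own, and the fields elided with "…" are left out.

```python
# Index in the list == device ID, as in the example device list.
device_list = [
    {"id": 0, "region": 2, "zone": 1, "weight": 100},
    {"id": 1, "region": 3, "zone": 1, "weight": 100},
    {"id": 2, "region": 1, "zone": 3, "weight": 100},
    {"id": 3, "region": 2, "zone": 2, "weight": 100},
]

drive_id = 2  # drive ID taken from the look-up table for partition 2
device = device_list[drive_id]
print(f"device {device['id']}: region {device['region']}, zone {device['zone']}")
```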